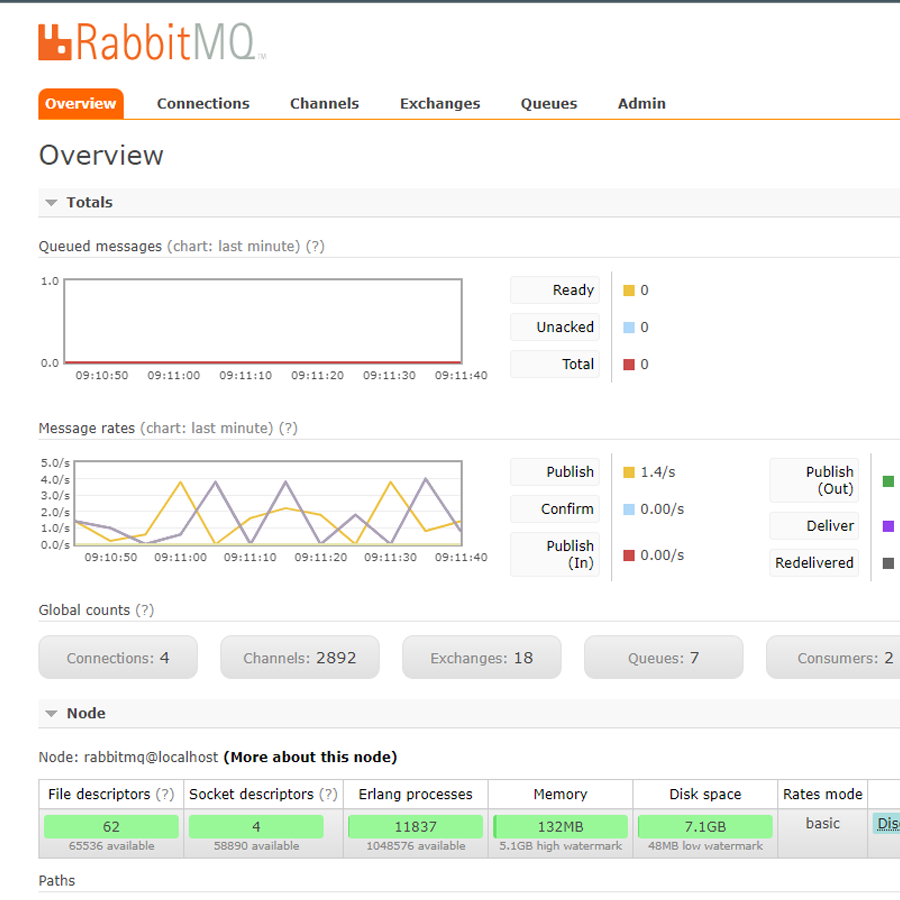

Incoming messages in RabbitMQ

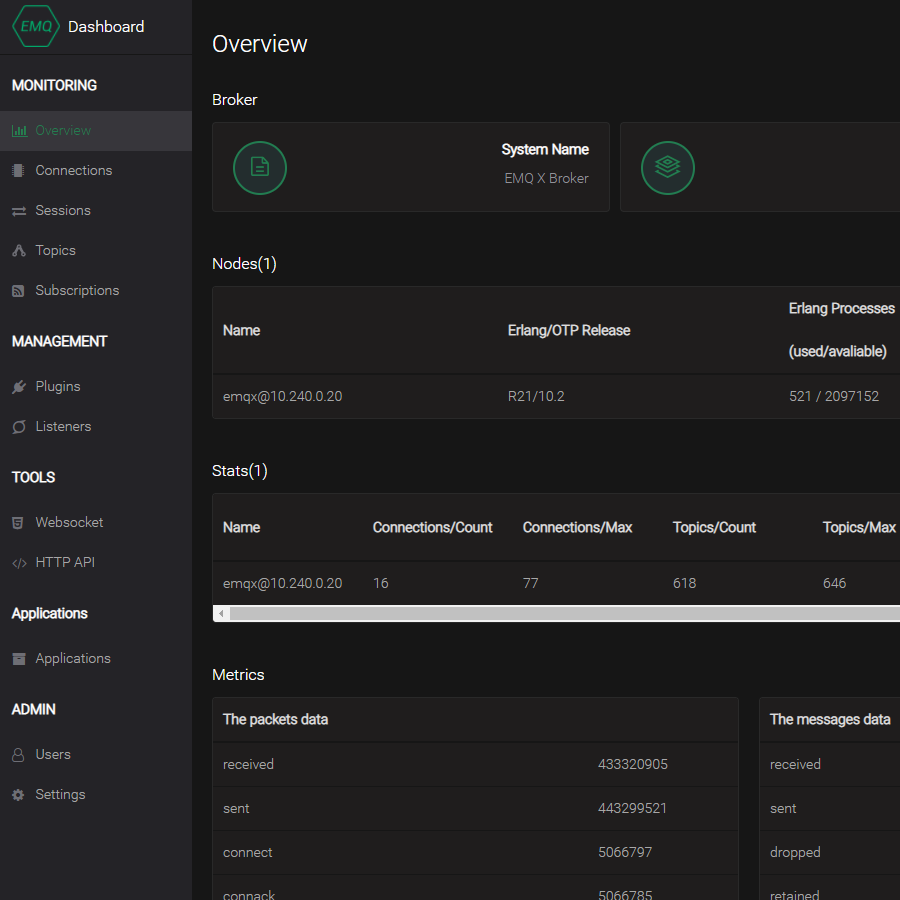

The MQTT dashboard

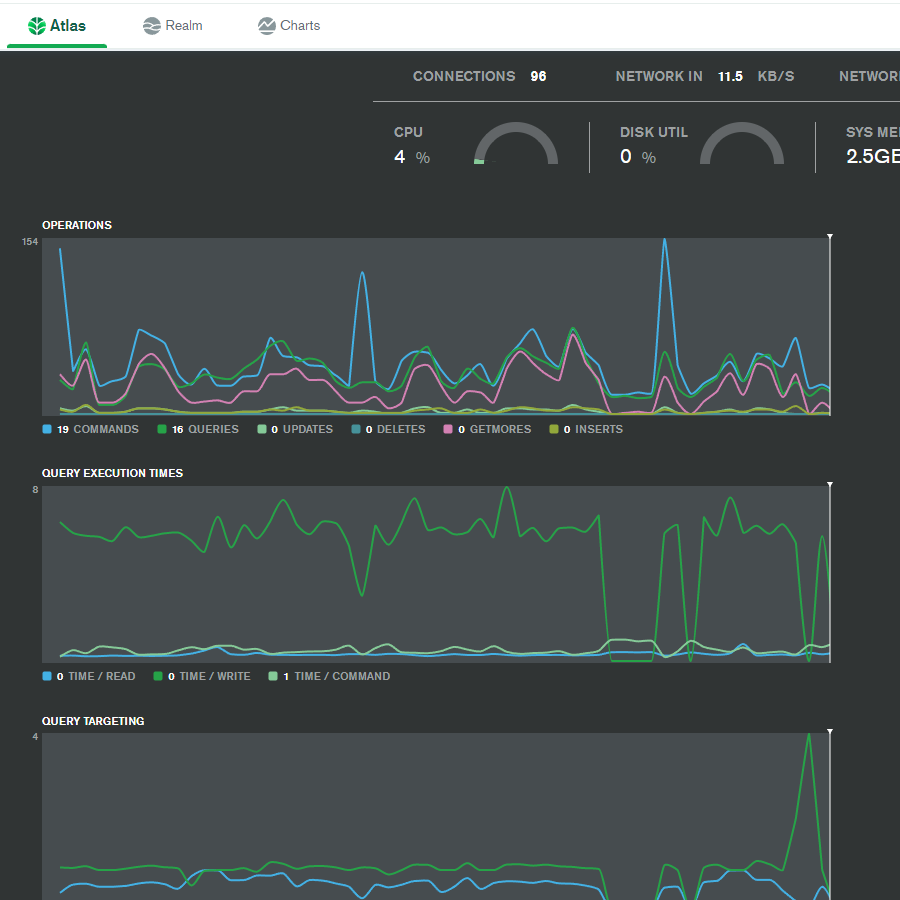

MongoDB Atlas activity

IIoT Stack

| | |
|---|---|
| The Client: | Digital Water Solutions Inc. |
| The Role: | Engineering & UX Design |
| The When: | 2016-2021 |

The Overview

DWS makes a smart hydrant insert that can detect leaks and physical tampering and measure water temperature. The inserts are installed into existing hydrants, which remain in service once the insert is in place. The sensors (pressure, hydrophone, temperature) sit in a stainless steel enclosure below the hydrant valve, allowing constant monitoring of the municipal water system. I designed and coded the RESTful API and the system that receives, processes and stores telemetry. I also set up and maintained the hosting infrastructure.

TL;DR

API Design & Documentation

The RESTful API was documented using RAML, and the raml2html utility was used to generate the HTML version of the documentation (link to documentation provided above). The endpoints generally match the database collections, with some enhancements to enforce important business rules. As with most API designs, the aim was to balance performance against ease of use.

Tech Stack

The challenge with IoT telemetry is not only the volume of data flowing into the system but also how much that flow fluctuates. Hydrants first send data to an MQTT broker. A set of auto-scaling workers subscribes to the hydrant topics, decodes the CBOR data and re-queues it to a RabbitMQ broker (note: a Redis key store is used to persist multi-part data). The auto-scaling RabbitMQ workers write the telemetry data to the MongoDB database and handle any alerts that are triggered (Twilio is used for SMS and email alerts). The load-balanced API is written in Node.js and its processes are managed with PM2. API endpoints that request telemetry data act only as a middleman: data is queried and streamed to the caller with no processing, which allows for fast response times. The workers and the API are hosted on GCP.
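To illustrate the middleman idea, here is a minimal TypeScript sketch of passing query results straight through to the caller one document at a time. It is not the production code: the `Cursor` interface, the `streamAsNdjson` and `arrayCursor` names, and the choice of newline-delimited JSON as the output format are all assumptions made for the example.

```typescript
// Hypothetical minimal cursor: yields one document at a time, null when done.
// A real database cursor would be asynchronous; this one is kept synchronous
// so the example stays short.
interface Cursor<T> {
  next(): T | null;
}

// Writes each document as one line of JSON as soon as it is read, so the
// full result set is never held in memory and no per-document processing
// happens between the query and the caller.
function streamAsNdjson<T>(cursor: Cursor<T>, write: (chunk: string) => void): number {
  let count = 0;
  for (let doc = cursor.next(); doc !== null; doc = cursor.next()) {
    write(JSON.stringify(doc) + "\n");
    count++;
  }
  return count; // number of documents passed through
}

// Stand-in for a database cursor, backed by an array for the example.
function arrayCursor<T>(items: T[]): Cursor<T> {
  let i = 0;
  return { next: () => (i < items.length ? items[i++] : null) };
}

// Usage: collect the chunks that would normally go to the HTTP response.
const chunks: string[] = [];
const sent = streamAsNdjson(arrayCursor([{ psi: 61.2 }, { psi: 60.8 }]), (chunk) => {
  chunks.push(chunk);
});
console.log(sent, chunks.join(""));
```

In a real endpoint the `write` callback would be the HTTP response stream, which is what keeps memory use flat regardless of how many readings a query returns.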

The Workers

There are two sets of workers used to process incoming telemetry. The first set processes the telemetry data coming in from the hydrants. Each data point sent to the MQTT server has a unique topic that includes the hydrant ID and the sensor type, and all telemetry data is encoded with CBOR. Messages can be single-part or multi-part. Single-part telemetry is decoded into the JSON document layout required for database insertion and re-queued to a RabbitMQ broker. Multi-part telemetry occurs when a hydrant sends a lot of data at once (a pressure event, for example). Since the workers are stateless, each part is sent to a Redis server. Once the last part is received, the worker assembles all of the parts into one big CBOR payload, then decodes each data point and re-queues it to the RabbitMQ broker (this can be hundreds of data points).

The second set consists of two RabbitMQ workers. The first simply subscribes to the queue holding the documents that need to be inserted into the database. This worker is very simple: it takes the unaltered JSON document and inserts it into the MongoDB collection. The second worker checks whether telemetry values fall outside the configured min/max range and sends out an alert via Twilio when they do.

All of the workers are written in Node.js, are stateless and are hosted as auto-scaling instances on GCP.
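The multi-part reassembly step can be sketched roughly as follows. This is an illustration under stated assumptions, not the production worker: the `Part` fields (`messageId`, `index`, `total`) are a hypothetical wire format, `InMemoryPartStore` stands in for the Redis server, and the calls are synchronous for brevity where a Redis-backed store would be asynchronous.

```typescript
// Hypothetical shape of one part of a multi-part message.
interface Part {
  messageId: string; // assumed: groups the parts of one payload
  index: number;     // assumed: zero-based position of this part
  total: number;     // assumed: number of parts in the payload
  bytes: Uint8Array; // raw slice of the CBOR payload
}

// Shared store that lets stateless workers cooperate.
interface PartStore {
  put(messageId: string, index: number, bytes: Uint8Array): number; // parts stored so far
  takeAll(messageId: string): Uint8Array[];                         // read and clear
}

// In-memory stand-in for Redis, for the example only.
class InMemoryPartStore implements PartStore {
  private pending: Record<string, Uint8Array[]> = Object.create(null);

  put(messageId: string, index: number, bytes: Uint8Array): number {
    const slots = this.pending[messageId] || (this.pending[messageId] = []);
    slots[index] = bytes; // a re-sent part overwrites its own slot
    // filter skips the holes left by parts that have not arrived yet.
    return slots.filter((slot) => slot !== undefined).length;
  }

  takeAll(messageId: string): Uint8Array[] {
    const slots = this.pending[messageId] || [];
    delete this.pending[messageId];
    return slots;
  }
}

// Stores the part and returns the full payload once every part has arrived,
// otherwise null. The returned bytes are what would be CBOR-decoded next.
function acceptPart(store: PartStore, part: Part): Uint8Array | null {
  if (part.index < 0 || part.index >= part.total) {
    throw new Error("part index out of range for " + part.messageId);
  }
  const stored = store.put(part.messageId, part.index, part.bytes);
  if (stored < part.total) return null;

  const slots = store.takeAll(part.messageId);
  let length = 0;
  for (let i = 0; i < part.total; i++) length += slots[i].length;

  const payload = new Uint8Array(length);
  let offset = 0;
  for (let i = 0; i < part.total; i++) {
    payload.set(slots[i], offset);
    offset += slots[i].length;
  }
  return payload;
}

// Usage: parts may be handled by different workers and in any order.
const store = new InMemoryPartStore();
const waiting = acceptPart(store, { messageId: "evt-1", index: 1, total: 2, bytes: new Uint8Array([3, 4]) });
const complete = acceptPart(store, { messageId: "evt-1", index: 0, total: 2, bytes: new Uint8Array([1, 2]) });
console.log(waiting, complete);
```

This sketch treats a message as complete when the stored part count reaches `total`, rather than when a particular "last" part shows up, so it tolerates out-of-order delivery. Whether the real workers needed that is not stated in the text.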